Testing network limits with collaborative music 🌿🎼🎹

The pandemic year 😷 was hard on all of us. Lockdowns and travel restrictions not only affected our own music classes and artistic research but also profoundly changed music performance all over the world. Musicians could no longer meet in the same room to play music together. But one thing was clear: music still needed to be made. So musical performance moved to the network, and the result was a proliferation of software for networked music.

While networked performance (sometimes called telematic performance) has been around for at least 10 years, it was not until very recently that the audio dev community turned its attention to building new tools that let performers play music together over home Internet connections. I like to think of this move to the network as a media replacement: from air (the preferred medium of musical sound) to the electronic medium (networked music performance media).

🗣 ➡️ 🎤↗️🌎🌍🌏↘️ 🎧 ➡️ 👂

Networks have become a new performative space, and with them comes a new opportunity to rethink music and music performance research. We can think of this as an explosion of networked music performance, whose counterpart is an implosion of sound into the electronic medium. The limits of what we can do in the midst of this big bang of networked media still need to be explored.

1002: A Case Study 📚 🤖

Together with Federico Ragessi, we created 1002, a case study of a collaborative networked performance built with custom software called CollidePd. The work is meant to be streamed live on a web page that turns the listener into a performer. On this website, performers connect remotely from mobile devices 🤳 or computers 💻, controlling sounds 🔊 in the same piece of music they are listening to. In this way, 1002 is an invitation to collaborate anonymously and in real time with remote performers, with no musical training required 🤯.

The sonic research behind this performance centers on sonifying the musical gestures that performers make with their mobile devices, emphasizing the participatory movement of the performers.
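To make "sonifying a gesture" a bit more concrete, here is a minimal sketch in TypeScript. It assumes the web client reads the phone's tilt angles (the `beta` and `gamma` values browsers expose through `DeviceOrientationEvent`) and maps them linearly to a pitch and a loudness. The `GestureSample` and `SoundParams` types, the frequency range, and the mapping itself are hypothetical choices for illustration, not the mapping used in 1002.

```typescript
// Hypothetical gesture sample: tilt angles in degrees, as reported by the
// browser's DeviceOrientationEvent (beta: front-back tilt, gamma: left-right tilt).
interface GestureSample {
  beta: number;  // expected range: -180 to 180
  gamma: number; // expected range: -90 to 90
}

// Hypothetical sound parameters handed on to a sound engine.
interface SoundParams {
  frequencyHz: number;
  amplitude: number; // 0 (silent) to 1 (full)
}

// Clamp a value into [min, max].
function clamp(x: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, x));
}

// Linearly rescale x from [inMin, inMax] to [outMin, outMax], clamping out-of-range input.
function scale(x: number, inMin: number, inMax: number, outMin: number, outMax: number): number {
  const t = (clamp(x, inMin, inMax) - inMin) / (inMax - inMin);
  return outMin + t * (outMax - outMin);
}

// Assumed mapping: front-back tilt controls pitch, left-right tilt controls loudness.
function sonifyGesture(g: GestureSample): SoundParams {
  return {
    frequencyHz: scale(g.beta, -180, 180, 110, 880),
    amplitude: scale(Math.abs(g.gamma), 0, 90, 0, 1),
  };
}

console.log(sonifyGesture({ beta: 0, gamma: -45 })); // { frequencyHz: 495, amplitude: 0.5 }
```

A linear mapping in hertz keeps the sketch short; an exponential pitch mapping would make equal tilts sound like more even musical steps.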

The tech

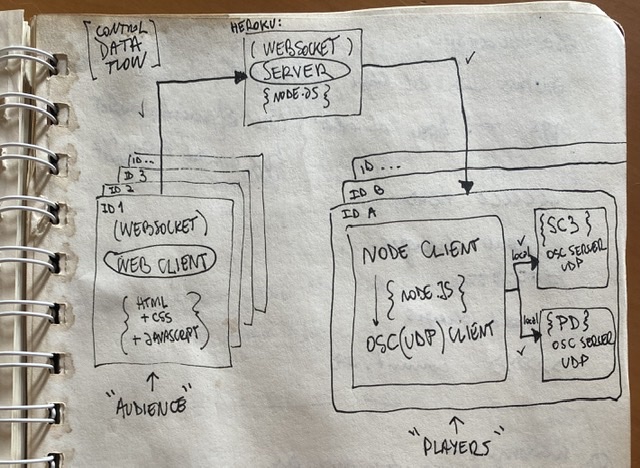

The platform is based on a client-server architecture, with a server written in node.js hosted in heroku and two types of clients, one for web written in javascript and another, local, written in node.js.

Here's a diagram

There are two local clients. Each one receives the data stream from up to 1000 web clients and sonifies it, using Pure Data or SuperCollider as its sound engine. In this way, web participants choose whether or not to play the same work they are listening to, making this a collaborative and anonymous performance.

Note that these two local clients are optional. The real activity happens online.
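The flow described above, many web players in and a few listening clients out, can be sketched as a small publish/subscribe hub. This is a minimal in-memory sketch in TypeScript under assumed names (`Hub`, `PlayerMessage`, and `Subscriber` are hypothetical); the real server runs on Node.js and talks to its clients over the network, and that transport is left out here. It illustrates two points from the text: the hub admits at most 1000 web clients, and it relays every player message to whichever local clients are subscribed, including none at all.

```typescript
// Hypothetical message a web client sends: who is playing and their tilt values.
interface PlayerMessage {
  playerId: string;
  beta: number;
  gamma: number;
}

// A subscriber is any client consuming the stream, e.g. a local sonifier.
type Subscriber = (msg: PlayerMessage) => void;

class Hub {
  private players = new Set<string>();
  private subscribers: Subscriber[] = [];
  private readonly maxPlayers: number;

  constructor(maxPlayers = 1000) {
    this.maxPlayers = maxPlayers;
  }

  // Admit a web client if there is room; returns false once the cap is reached.
  join(playerId: string): boolean {
    if (this.players.has(playerId)) return true;
    if (this.players.size >= this.maxPlayers) return false;
    this.players.add(playerId);
    return true;
  }

  leave(playerId: string): void {
    this.players.delete(playerId);
  }

  // Local clients register here; the hub works the same with zero subscribers.
  subscribe(fn: Subscriber): void {
    this.subscribers.push(fn);
  }

  // Relay a message from an admitted player to every subscriber.
  // Returns how many subscribers received it.
  publish(msg: PlayerMessage): number {
    if (!this.players.has(msg.playerId)) return 0;
    for (const fn of this.subscribers) fn(msg);
    return this.subscribers.length;
  }
}

// Usage: two subscribers standing in for the Pure Data and SuperCollider clients.
const hub = new Hub(1000);
const received: string[] = [];
hub.subscribe((m) => received.push(`pd:${m.playerId}`));
hub.subscribe((m) => received.push(`sc:${m.playerId}`));
hub.join("player-1");
hub.publish({ playerId: "player-1", beta: 10, gamma: 5 });
console.log(received); // [ 'pd:player-1', 'sc:player-1' ]
```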

The visuals

The web page also shows a visualization of the movements made with the mobile devices, rendered by yet another separate client that collects all the data from the players.
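One way to picture that visualization client is as an aggregator that keeps each player's latest sample and turns it into something drawable. The sketch below is a minimal TypeScript illustration under assumed names (`MovementView`, `PlayerMessage`, and the tilt-to-coordinates projection are all hypothetical); it computes one point per player and leaves the actual drawing, for example on a canvas, out.

```typescript
// Hypothetical message shape, as reported by each player's device.
interface PlayerMessage {
  playerId: string;
  beta: number;  // front-back tilt in degrees, -180 to 180
  gamma: number; // left-right tilt in degrees, -90 to 90
}

interface Point {
  playerId: string;
  x: number;
  y: number;
}

// Keeps only the most recent sample from each player and projects
// every player's tilt onto a width x height drawing area.
class MovementView {
  private latest = new Map<string, PlayerMessage>();
  private readonly width: number;
  private readonly height: number;

  constructor(width: number, height: number) {
    this.width = width;
    this.height = height;
  }

  // Called for every message the visual client receives from the server.
  update(msg: PlayerMessage): void {
    this.latest.set(msg.playerId, msg);
  }

  // One point per player: gamma moves it left/right, beta moves it up/down.
  points(): Point[] {
    const pts: Point[] = [];
    for (const m of this.latest.values()) {
      pts.push({
        playerId: m.playerId,
        x: ((m.gamma + 90) / 180) * this.width,
        y: ((m.beta + 180) / 360) * this.height,
      });
    }
    return pts;
  }
}

const view = new MovementView(400, 400);
view.update({ playerId: "a", beta: 0, gamma: 0 });
view.update({ playerId: "a", beta: 180, gamma: 90 }); // replaces a's earlier sample
view.update({ playerId: "b", beta: -180, gamma: -90 });
console.log(view.points());
```

Keeping only the latest sample per player means the picture stays the same size no matter how fast the devices send data.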

To be continued… (I'm tired and I'll go to sleep 😴)